First, let’s define what a robots.txt file is and why you might want to use one. The file implements what is known as the Robots Exclusion Standard (also called the Robots Exclusion Protocol). It’s just a text file located in a site’s root directory that gives instructions to the bots visiting your site, restricting their access to certain areas.

Of course, that “restriction” is really just a request. It’s like putting a DO NOT ENTER sign on an unlocked door – it will only keep the honest bots honest.

Why Have a robots.txt File?

There are a few reasons why you might want to have a robots.txt file on your site. If your hosting service limits your bandwidth, for instance, you might opt to keep some or all bots from crawling some of your pages. Unless you happen to be a lawyer, you’re probably not interested in ranking for Terms & Conditions or Privacy Policy. Your Contact Us form scripts won’t do you much good if they show up in the SERPs, either.

You might also want to limit the load on your server. Eliminating calls for site search scripts and contact forms by the various bots that hit your site every day can save server resources. If your server is significantly loaded, lightening the load can be helpful.

If you have images on your site that you don’t want in the SERPs, you can place them in a location that is restricted by your robots.txt. An architect, for instance, might not want his drawings being crawled and indexed. It’s better to simply prevent access to them via the robots.txt file, rather than allowing them all to be crawled and then marking each one with noindex, nofollow directives.

There are other reasons to use a robots.txt, but these are the principal needs. A robots.txt file certainly isn’t required, but in many instances, it can be wise to have one. If you have none at all, then every bot that visits will receive a 404 response code when it calls for the robots.txt, which will consume resources and bandwidth and flood your Webmaster Tools with 404s, cluttering things up quite a bit. Here’s what Matt Cutts had to say about it in 2011:

How to Build a robots.txt File

This is a very simple file to build. First, you’ll need an ASCII text editor. If you’re using a Windows-based machine, you can use Notepad. Do NOT use Word, WordPad or Write! They aren’t ASCII editors and will insert formatting that will make the file invalid for the bots.
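If you’re not sure whether your editor really saved plain text, a few lines of Python can tell you. This is only a rough sketch: the function name is_plain_ascii and the two sample byte strings are made up for illustration, and the "bad" sample is just one plausible example of what a word processor might write.

```python
def is_plain_ascii(data: bytes) -> bool:
    """Return True if data holds only printable ASCII, tabs and line breaks."""
    allowed_control = {0x09, 0x0A, 0x0D}  # tab, line feed, carriage return
    return all(b in allowed_control or 0x20 <= b <= 0x7E for b in data)


# What a plain-text editor such as Notepad would typically save.
good = b"User-agent: *\r\nDisallow: /forms/\r\n"

# Hypothetical word-processor output: a UTF-8 byte-order mark at the start
# and a curly quote before the path, neither of which is ASCII.
bad = b"\xef\xbb\xbfUser-agent: *\r\nDisallow: \xe2\x80\x9c/forms/\r\n"

print(is_plain_ascii(good))  # True
print(is_plain_ascii(bad))   # False
```

To check a real file, read it in binary mode and pass the bytes in, for example is_plain_ascii(open("robots.txt", "rb").read()).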

The file may start out by calling out your sitemap, with a single-line entry like:

Sitemap: http://yoursite.com/sitemap.xml

Then, it will identify a User Agent. We’ll use Googlebot for our example:

User-agent: Googlebot

The next line will be the directive for Googlebot. If you don’t want to place any restrictions whatsoever, the line will simply be:

Disallow:

which tells Googlebot it can go anywhere on your site that it likes. If you wanted to tell it not to visit any of your site, you would use:

Disallow: /

On the other hand, if you only want it to stay away from the contents of your forms folder, you would use:

Disallow: /forms/

Note the trailing slash to denote that forms is a folder! Without it, the rule would match any path that merely begins with /forms, such as /forms.html. If it’s a document you’re disallowing, no trailing slash is required.
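If you’d like to see how a parser reads these Disallow lines, Python’s standard library includes one in urllib.robotparser. The sketch below feeds it a two-line file and asks whether Googlebot may fetch a given path. The helper name googlebot_may_fetch and the sample paths are invented for this example, and the results show how Python’s parser behaves, which may not match every crawler in every detail.

```python
from urllib.robotparser import RobotFileParser


def googlebot_may_fetch(disallow_line: str, path: str) -> bool:
    """Parse a two-line robots.txt and ask whether Googlebot may fetch path."""
    parser = RobotFileParser()
    # The User-agent line and its Disallow line are passed as consecutive lines.
    parser.parse(["User-agent: Googlebot", disallow_line])
    return parser.can_fetch("Googlebot", path)


# An empty Disallow places no restriction at all.
print(googlebot_may_fetch("Disallow:", "/forms/contact.php"))          # True
# A lone slash blocks the whole site.
print(googlebot_may_fetch("Disallow: /", "/index.html"))               # False
# With the trailing slash, only the contents of the folder are blocked.
print(googlebot_may_fetch("Disallow: /forms/", "/forms/contact.php"))  # False
print(googlebot_may_fetch("Disallow: /forms/", "/forms.html"))         # True
# Without it, the rule is a plain prefix match and catches more.
print(googlebot_may_fetch("Disallow: /forms", "/forms.html"))          # False
```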

If you want all bots to stay out of your forms folder, you can substitute Googlebot in the User-agent line with an asterisk, or wildcard. Thus, your entry would appear like this:

User-agent: *

Disallow: /forms/

Unless your instructions are a universal restriction (a wildcard asterisk for User-agent, with the same restrictions across the board), these two lines need to be repeated for each bot. So you might have something like this:

User-agent: Googlebot

Disallow:

User-agent: Googlebot-Image

Disallow: /images/

and so on, through the list of different bots you want to address.
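Once the list of bots grows, typing the same pair of lines over and over invites mistakes. One option is to generate the file from a simple table of bots and paths. The following is a minimal sketch, not a standard tool: the function name build_robots_txt and the shape of the rules dictionary are assumptions made for this example.

```python
def build_robots_txt(rules: dict) -> str:
    """Render one User-agent record per bot, separated by blank lines.

    rules maps a user-agent name to the list of paths it should stay out of;
    an empty list means no restriction (a bare "Disallow:" line).
    """
    records = []
    for agent, paths in rules.items():
        lines = [f"User-agent: {agent}"]
        # An empty Disallow value tells the bot that nothing is off limits.
        lines += [f"Disallow: {path}" for path in paths] or ["Disallow:"]
        records.append("\n".join(lines))
    return "\n\n".join(records) + "\n"


print(build_robots_txt({
    "Googlebot": [],
    "Googlebot-Image": ["/images/"],
    "*": ["/forms/"],
}))
```

Each bot gets its own record, with its User-agent and Disallow lines kept together and a blank line separating one record from the next.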

There’s another directive you can place, as well – the Crawl-delay directive. This basically just tells the bot how many seconds to delay between requests. Not every bot honors it: Googlebot ignores Crawl-delay, while Bing’s crawler recognizes it, so we’ll use bingbot for this example. If you use this parameter, it can go between the User-agent: and Disallow: lines, thus:

User-agent: bingbot

Crawl-delay: 10

Disallow:
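To confirm that a Crawl-delay value is being read the way you intended, you can ask Python’s urllib.robotparser for it. This is a small sketch under a couple of assumptions: bingbot is used as the agent purely for illustration, OtherBot is a made-up name, and the crawl_delay method needs Python 3.6 or later.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: bingbot",
    "Crawl-delay: 10",
    "Disallow:",
])

print(parser.crawl_delay("bingbot"))   # 10
print(parser.crawl_delay("OtherBot"))  # None, since no record applies to this bot
# The empty Disallow still leaves the whole site open to the bot.
print(parser.can_fetch("bingbot", "/any/page.html"))  # True
```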

Those are the basics of a robots.txt file. There are other things that can be done in the robots.txt, but the above will handle most of what you need.
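Putting the pieces together, here is a rough sketch that assembles a complete file from the lines used above and reads it back with Python’s urllib.robotparser. The page about.html is a hypothetical example, and the site_maps method assumes Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line plus one wildcard record, as they would appear in the file.
ROBOTS_TXT = """\
Sitemap: http://yoursite.com/sitemap.xml

User-agent: *
Disallow: /forms/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.site_maps())  # ['http://yoursite.com/sitemap.xml']
# The wildcard record applies to Googlebot because no record names it directly.
print(parser.can_fetch("Googlebot", "http://yoursite.com/forms/contact.php"))  # False
print(parser.can_fetch("Googlebot", "http://yoursite.com/about.html"))         # True
```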

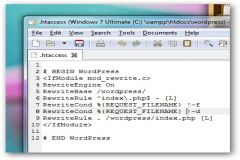

As a footnote, if you run one of the millions of WordPress sites out there, it’s advisable to disallow all bots from certain directories, such as:

User-agent: *

Disallow: /wp-admin

Disallow: /wp-includes

Disallow: /wp-content

Let me stress again that while these are referred to as directives, they’re really better considered as “suggestions”. Bots that are up to no good will usually ignore them and go wherever they like on your site. So this is not a means of protecting information. If you have sensitive files that you don’t want to be accessible, you should handle that at the server level.

Remember, too, that even if a page or folder is blocked with the robots.txt, a link to that document from elsewhere provides another means of access – a back door through which bots can still discover the URL, and search engines may list it even without crawling it.

So now you can generate your robots.txt and gain a little more control over your site. Just be certain to check it carefully. Untold horrors can take place if you get it wrong, with a site even disappearing from the search results entirely.
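One way to do that checking is to list a few pages that must stay crawlable and test them against the file before you upload it. The sketch below is only an illustration: the helper blocked_paths and the sample pages are hypothetical, and it reflects how Python’s urllib.robotparser reads the file rather than how any particular search engine does.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, agent: str, must_be_open: list) -> list:
    """Return the paths from must_be_open that agent may NOT fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in must_be_open if not parser.can_fetch(agent, p)]


# A slip of the keyboard: "Disallow: /" where "Disallow: /forms/" was meant.
broken = "User-agent: *\nDisallow: /\n"
intended = "User-agent: *\nDisallow: /forms/\n"

# Hypothetical pages that should always remain open to crawlers.
pages = ["/", "/products/widget.html"]

print(blocked_paths(broken, "Googlebot", pages))    # ['/', '/products/widget.html']
print(blocked_paths(intended, "Googlebot", pages))  # []
```

An empty list means none of your important pages are shut out; anything else deserves a second look before the file goes live.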